By OpenAI's own testing, its newest reasoning models, o3 and o4-mini, hallucinate significantly more often than o1.

As first reported by TechCrunch, OpenAI's system card details the results of PersonQA, an evaluation designed to test for hallucinations. According to those results, o3's hallucination rate is 33 percent, and o4-mini's is 48 percent, which means it hallucinates almost half of the time. By comparison, o1's hallucination rate is 16 percent, meaning o3 hallucinated about twice as often.

The system card noted that o3 "tends to make more claims overall, leading to more accurate claims as well as more inaccurate/hallucinated claims." But OpenAI doesn't know the underlying cause, saying only, "More research is needed to understand the cause of this result."

OpenAI's reasoning models are billed as more accurate than its non-reasoning models like GPT-4o and GPT-4.5 because they use more computation to "spend more time thinking before they respond," as described in the o1 announcement. Rather than largely relying on stochastic methods to provide an answer, the o-series models are trained to "refine their thinking process, try different strategies, and recognize their mistakes."

However, the system card for GPT-4.5, which was released in February, shows a 19 percent hallucination rate on PersonQA, lower than that of either o3 or o4-mini. The same card also compares it to GPT-4o, which had a 30 percent hallucination rate.

In a statement to Mashable, an OpenAI spokesperson said, “Addressing hallucinations across all our models is an ongoing area of research, and we’re continually working to improve their accuracy and reliability.”

Evaluation benchmarks are tricky. They can be subjective, especially if developed in-house, and research has found flaws in their datasets and even how they evaluate models.

Plus, different evaluations rely on different benchmarks and methods to test accuracy and hallucinations. Hugging Face's hallucination benchmark evaluates models on the "occurrence of hallucinations in generated summaries" of around 1,000 public documents, and it found far lower hallucination rates across the board for major models on the market than OpenAI's evaluations did. GPT-4o scored 1.5 percent, GPT-4.5 preview 1.2 percent, and o3-mini-high with reasoning 0.8 percent. It's worth noting that o3 and o4-mini weren't included in the current leaderboard.

All of which is to say that even industry-standard benchmarks make it difficult to assess hallucination rates.

Then there's the added complexity that models tend to be more accurate when tapping into web search to source their answers. But to power ChatGPT search, OpenAI shares data with third-party search providers, and Enterprise customers using OpenAI models internally might not be willing to expose their prompts to those providers.

Regardless, if OpenAI is saying its brand-new o3 and o4-mini models hallucinate more often than its non-reasoning models, that might be a problem for its users.

UPDATE: Apr. 21, 2025, 1:16 p.m. EDT This story has been updated with a statement from OpenAI.

CES 2024: Razer and Lexus made a gamer car

CES 2024: Razer and Lexus made a gamer car

Yokoyama Re

Yokoyama Re

Little Tokyo Historical Society to Release Biography of Civil Rights Leader Sei Fujii

Little Tokyo Historical Society to Release Biography of Civil Rights Leader Sei Fujii

Troop 378 Announces Four New Eagle Scouts

Troop 378 Announces Four New Eagle Scouts

38 TV shows we can't wait to see in 2024

38 TV shows we can't wait to see in 2024

Mainland deeply concerned about earthquake in southern Taiwan: spokesperson

Mainland deeply concerned about earthquake in southern Taiwan: spokesperson

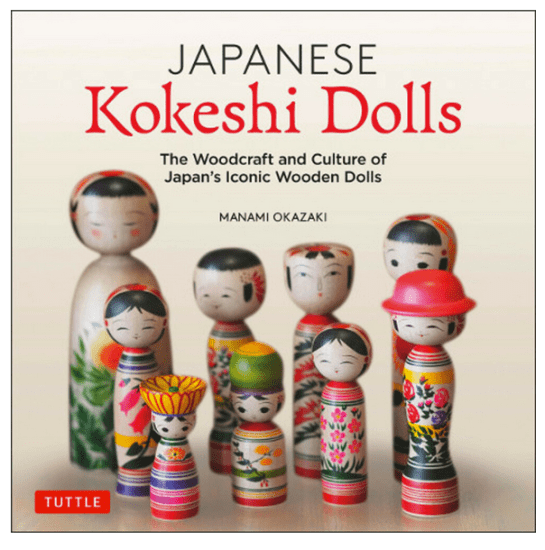

Book Explores Craft and Culture of Kokeshi Dolls

Book Explores Craft and Culture of Kokeshi Dolls

AAJA Condemns Racist Comments About KPIX

AAJA Condemns Racist Comments About KPIX

ESL представила аналог DPC — у DreamLeague появится второй дивизион

ESL представила аналог DPC — у DreamLeague появится второй дивизион

Japanese Scary Movie Screening on Halloween

Japanese Scary Movie Screening on Halloween

В память о Shushei игрокам в LoL раздадут скин на Грагаса

В память о Shushei игрокам в LoL раздадут скин на Грагаса

China urges Japan to stop playing tricks on Taiwan question: FM spokesperson

China urges Japan to stop playing tricks on Taiwan question: FM spokesperson

‘Setsuko’s Secret’ Discussion at JANM

‘Setsuko’s Secret’ Discussion at JANM

Mainland official visits Taiwan business people, compatriots ahead of Spring Festival

Mainland official visits Taiwan business people, compatriots ahead of Spring Festival

New York City blackouts always bring the wildest photos

New York City blackouts always bring the wildest photos

LTHS to Unveil Kame Restaurant Historical Marker

LTHS to Unveil Kame Restaurant Historical Marker

VOX POPULI: Roe vs. Wade, and Where to Look for Justice

VOX POPULI: Roe vs. Wade, and Where to Look for Justice

Double Honor for San Gorgonio’s Matt Maeda

Double Honor for San Gorgonio’s Matt Maeda

Scientists find subtle clues ancient Mars had rainy days, too

Scientists find subtle clues ancient Mars had rainy days, too

Little Tokyo Sparkle Roars Back After Hiatus

Little Tokyo Sparkle Roars Back After Hiatus

Uber CEO downplays murder of Jamal Khashoggi as 'mistake,' then backtracksTwitter wants your help figuring out how to deal with deepfakesOver 11,000 scientists from around the world declare a 'climate emergency'Screwed by AT&T's 'unlimited' plan? You could get some money soon.People are freaking out about mystery texts sent from their phonesWaymo moves its Austin team to other locationsPeople are freaking out about mystery texts sent from their phonesApple to launch AR headset in 2022 with smart glasses to follow in 2023, report claims'For All Mankind' never slows down enough to serve its clever premiseYouTube rolls out big changes to its desktop homepage Silicon Valley Twitter is brawling over coronavirus theories Major telcos will hand over phone location data to the EU to help track coronavirus Yelp and GoFundMe team up to help businesses struggling during coronavirus Google wisely cancels this year's April Fools' jokes The 65 absolute best moments from 'The Office' Google brings its Podcasts app to Apple's iOS with new redesigned look Get your Disney theme park fix with these virtual roller coaster rides HQ Trivia is actually back for real, just when we need it most This company says it knows who isn't socially distancing Koalas are being released back into the wild after Australian bushfires

1.5401s , 12524.78125 kb

Copyright © 2025 Powered by 【live show sex video】Enter to watch online.OpenAI's o3 and o4,